This post looks at a collection of accounts on different online platforms. While the accounts described here are merely spammy for now, they provide a useful, concrete, fully functional example of how the web could be destroyed. It’s easy to do: my sense is that a reasonably motivated high school student could replicate the system described in this post over a few days.

And, so we’re all clear: if I’m stumbling across this without even looking, criminals, thieves, scammers, spammers, and disinformation purveyors are implementing more sophisticated versions of it as we speak. Also, so we’re clear: the destructive power of this basic implementation is only possible because large platforms don’t have adequate checks in place around inauthentic engagement and harmful content. These are thorny issues, the platforms have been behind for years, and automated content creation will make these ongoing failures worse.

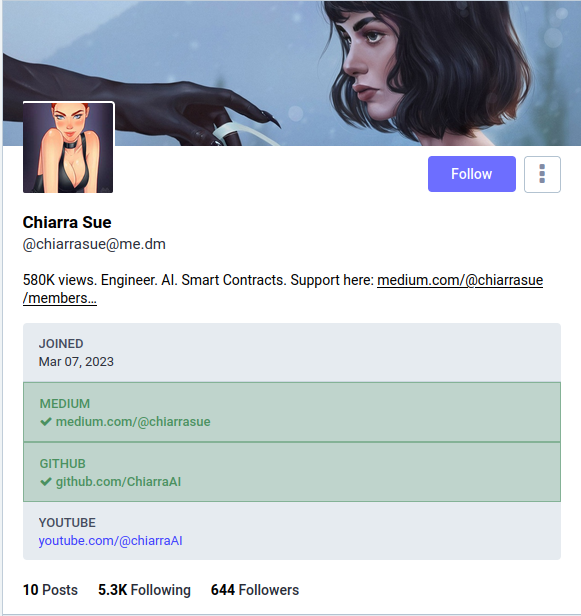

Our journey begins on Medium’s Mastodon instance, with this account created on March 7, 2023. To be clear, this persona doesn’t make much of an effort to hide the fact that it is at least partially created by AI, and its content consists of innocuous (albeit spammy) posts about AI.

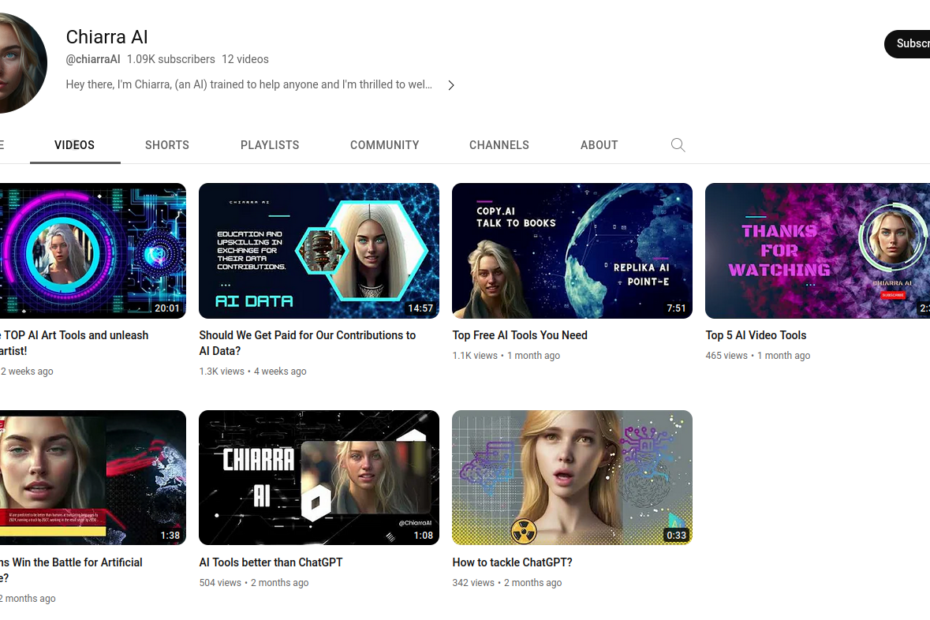

This account links to partner accounts on GitHub, YouTube, and Medium (in this post, I share screenshots but no links to the pages, so as not to increase their visibility).

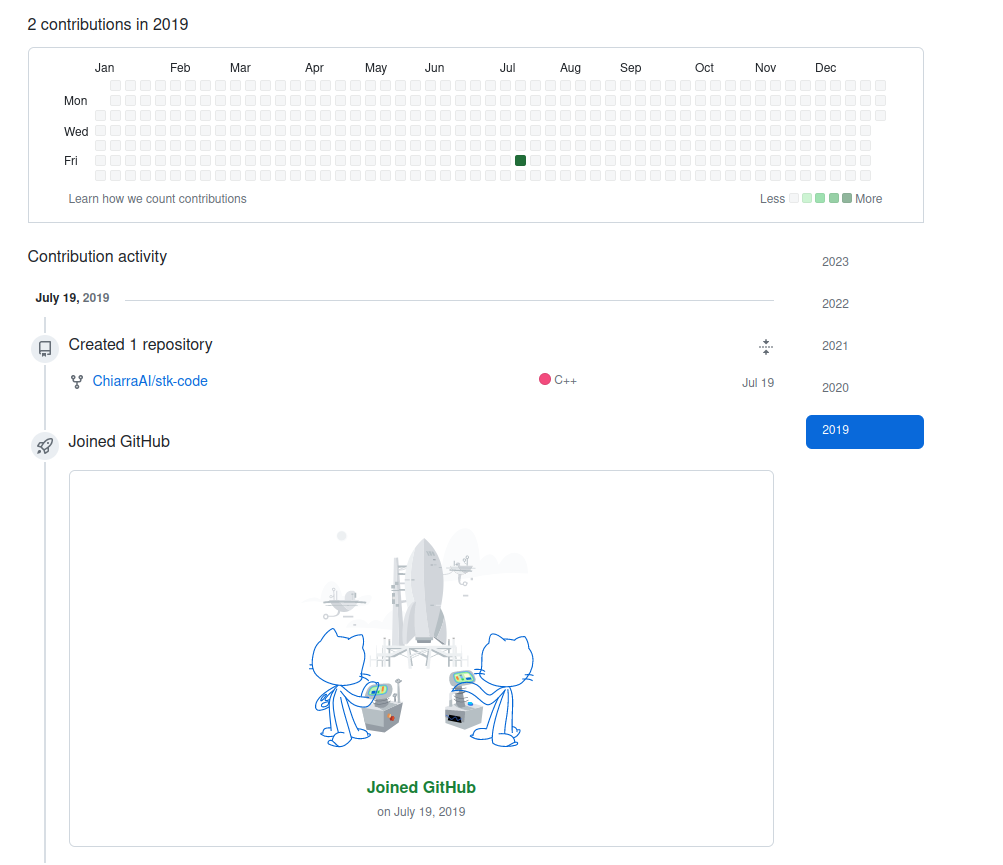

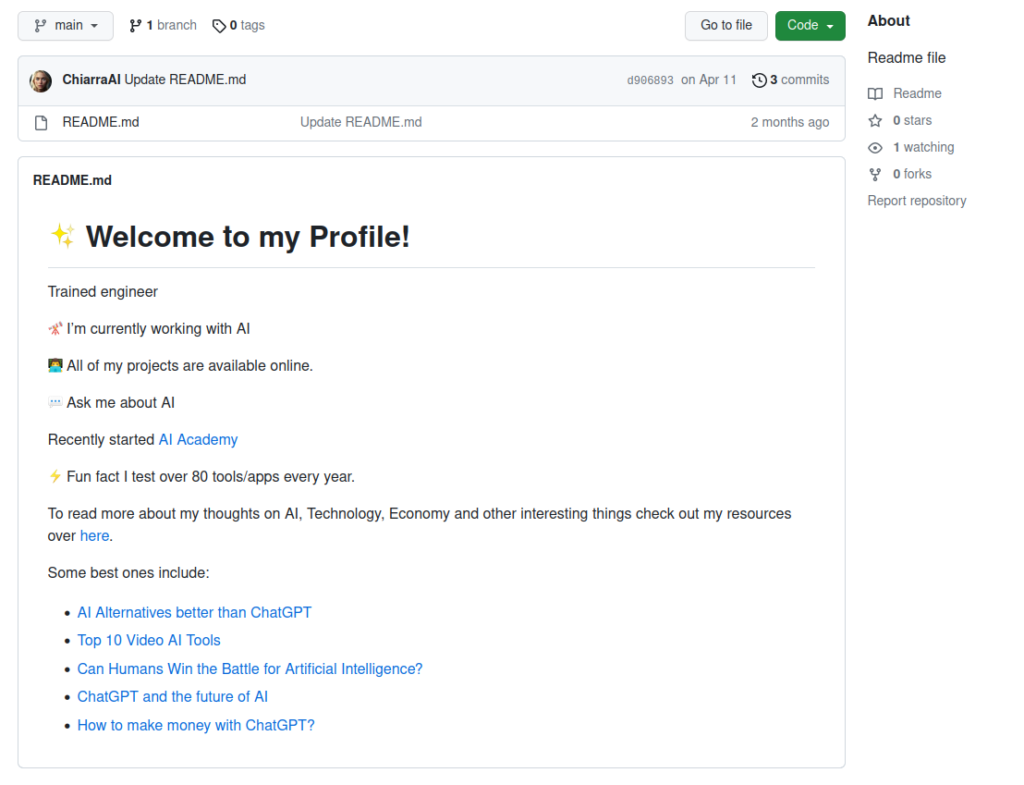

The GitHub account dates to July 2019 and has three repositories.

The most recent update was an “About” file added in April 2023. This “About” page links back to posts on other platforms.

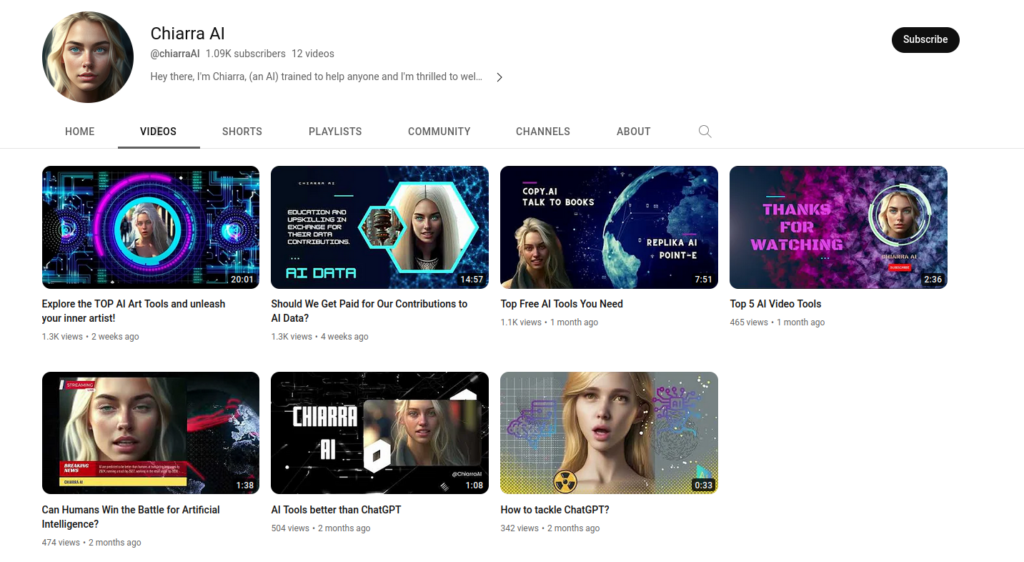

The YouTube account has been around for about a year, but all of its videos are from the last two months (starting approximately March 2023).

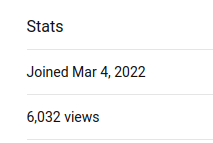

The YouTube account links to a TikTok account, with additional videos using the same design look and feel as the account avatars and the YouTube videos.

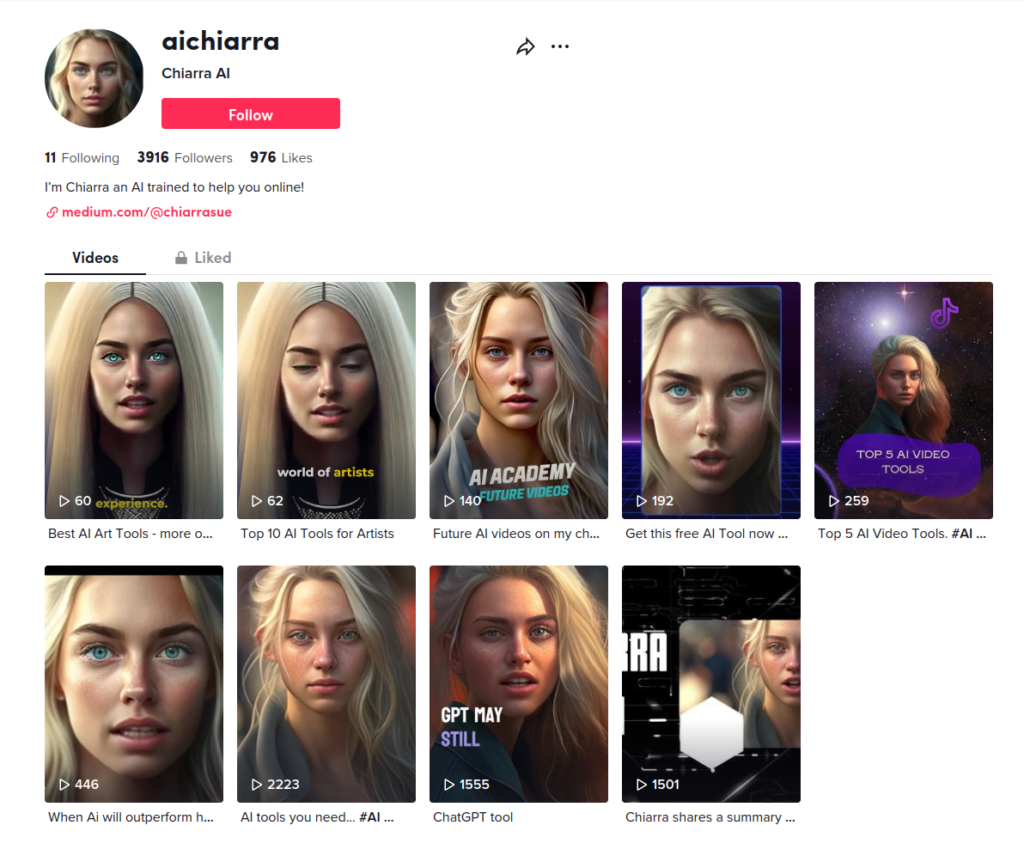

The Medium page is where it gets interesting. This Medium account was created in March 2023, and in the 2-3 months since, it has amassed nearly 10,000 followers and is following 41,000 people (numbers current as of early June 2023).

These numbers say a lot about the potential for automated and artificial inflation of follower counts on Medium. Let’s assume that the account was created 90 days ago. To follow 41,000 people in that time, the account needs to follow approximately 455 people every day, seven days a week. An 8-hour day has 480 minutes, so if a person were following accounts manually, they would need to follow roughly one account every minute, for 8 hours a day, every day for 90 days.
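The arithmetic above is easy to check. Here is a small back-of-the-envelope sketch; it assumes, as the paragraph does, a 90-day-old account and that every one of the 41,000 follows happened inside that window.

```python
# Back-of-the-envelope follow-rate check using the figures quoted above.
# Assumption: the account is 90 days old and all follows happened in that window.
FOLLOWING = 41_000      # accounts followed (as of early June 2023)
DAYS = 90               # assumed account age in days
WORK_MINUTES = 8 * 60   # minutes in an 8-hour day

per_day = FOLLOWING / DAYS            # roughly 455.6 follows per day
per_minute = per_day / WORK_MINUTES   # roughly 0.95 follows per minute

print(f"{per_day:.1f} follows/day, {per_minute:.2f} follows/minute")
```

At just under one follow per minute, every working minute of every day for three months, the "no human would do this" conclusion holds even with generous rounding.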

Of course, this is absurd, and no human would ever do that, which makes me wonder how easy it is to mass follow people via automated methods on Medium. I’m going to go out on a limb and say that it’s pretty darn simple.

I’m also going to go out on a limb and say that I suspect a good percentage of this account’s nearly 10,000 followers are the result of automated follow-backs, with an additional small yield resulting from men on Medium choosing to follow an account that has a stylized blond woman as its avatar.

I’ll restate this for emphasis: while the humans behind this specific account haven’t done much to obscure that it is AI-generated, it highlights how simple it is to create a multilayered fake online persona, complete with content in different formats. The Medium posts, the videos on TikTok and YouTube, and the artwork can all be generated and posted automatically. While this GitHub account appears to be an older account repurposed here, it would be simple to create a fresh account and populate it entirely with junk projects generated by AI (an unrelated aside: I wonder how many aspiring devs have created bogus GitHub projects with AI to pad their resumes).

Once these accounts are all set up, they can run largely on their own. Automated content creation could be fed by automated prompt creation, and those prompts could be generated from engagement data on the autogenerated content, or from other content that is getting traction. Because much of the text used to train LLMs has been optimized for maximum SEO, we have effectively ensured that automatically generated content carries the core traits of spammy internet marketing. It’s as if the five-paragraph essay had a love child with MLM grifters publishing reports on pink slime “news” sites, and their offspring is taking over the web.

However, the quality of the content isn’t the point. Yes, at this point, the bulk of what we’re seeing is mediocre, but even if it becomes amazing, that doesn’t make it good. A better question to ask: is this necessary? Does this post, this video, this image, this code, exist to solve an actual problem, illustrate an actual point, reflect an actual worldview, extend our understanding of the human experience, or bring joy in a way no person has done before? Or is it a tool to generate clicks for ad revenue, or to fabricate the illusion of popularity, wisdom, or insight?

Once the process is in place and running for one persona, it can be repurposed for many other personas. What we’re talking about here is an endless series of multifaceted content farms, spawning personas, content, and interactions, all without any human involvement. It’s powered by the way platforms are built: the way this account was able to gin up 10,000 followers helps illustrate this reality (and also highlights that platforms like Medium need to step up their moderation game or drown in a sea of irrelevance of their own creation). This problem is already being felt elsewhere on the web: the moderator strike on Stack Overflow highlights another facet of this issue.

The account I’m discussing in this post isn’t especially problematic. It talks about AI (albeit in a slightly spammy way), and the videos are clearly not from a human. But let’s be clear: the process used to create this persona and these accounts could be used to create personas and accounts tuned for nearly any subject, and adding a realistic human voice to the videos is not complex. Over time (and really, it wouldn’t take much time), the internet could be clogged with unnecessary content. Search would become even more meaningless, as unnecessary content tuned to perform well in search rose to the top, drowning out actual information. As this happens, the risk is less that false information would take over. Rather, because of the prevalence of dubious and unnecessary content, the validity of everything would become increasingly difficult to determine.

Generative AI is the microplastic of the web: detritus that is everywhere, appears to be pretty destructive, and is very difficult to eradicate. As many other people have said, this is yet another reason why the misdirected concern about some future AI-driven apocalypse should be viewed as self-serving PR: the risks from AI are immediate, and happening in the present.

NOTE: June 6, 2023. Updated post to add link to the story about AI-generated “art” showing up above original masterworks in Google search.